I’ve just realised something.

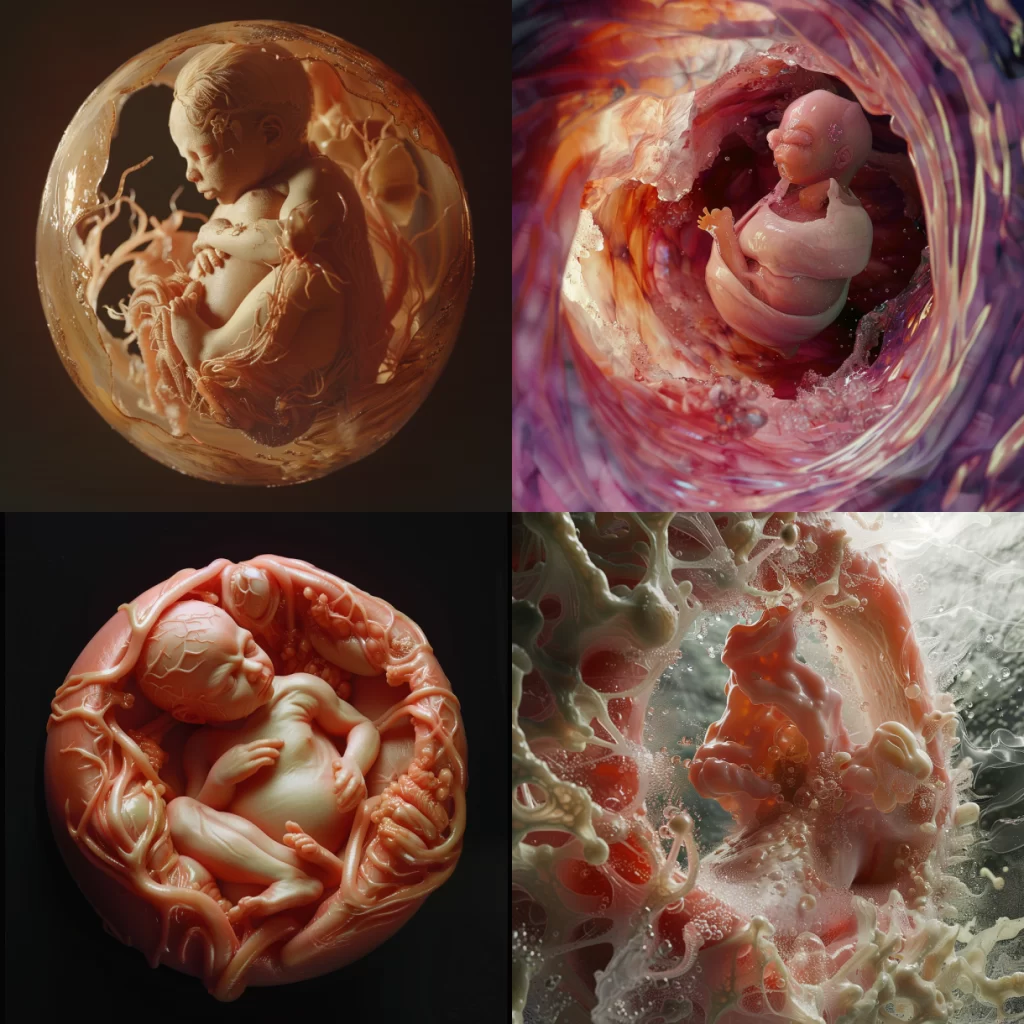

The model I have been using to create this project is similar to the process of asking AI to generate imagery for me.

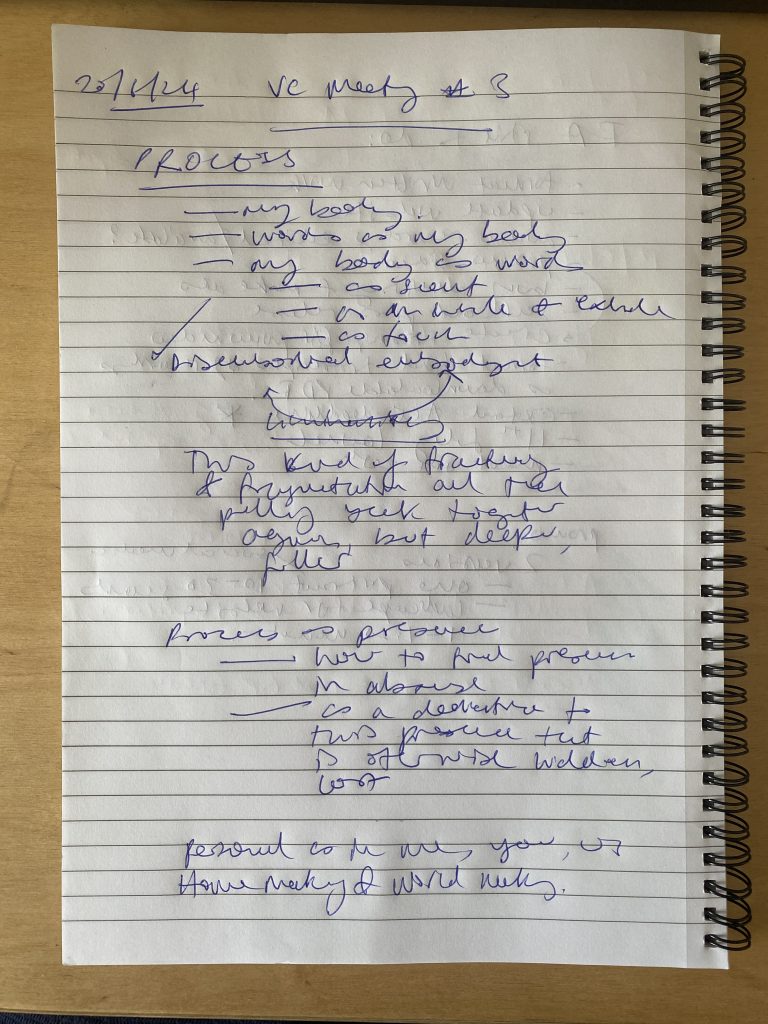

I am using a prompt to ask people living with chronic conditions to generate imagery of what their womb looks like.

The prompt is this central question: If your womb were a place you could visit, what would it look like?

The process for this project then asks the participants to form abstract imagery in their minds of what their womb would look like if it were a place. They then communicate that to me through the written or spoken word.

I am then taking that response and turning it into a prompt for AI, allowing algorithms to generate digital imagery of what the participants were describing to me. I’m only now realising that I’ve sort of acted like an intermediary between the participant and Midjourney.

If I’m giving the participants a prompt that triggers them to generate imagery, am I treating them the same way as an AI algorithm? Is that ethical? These questions are important to consider when working with people who are expressing intimate ideas about their bodies.