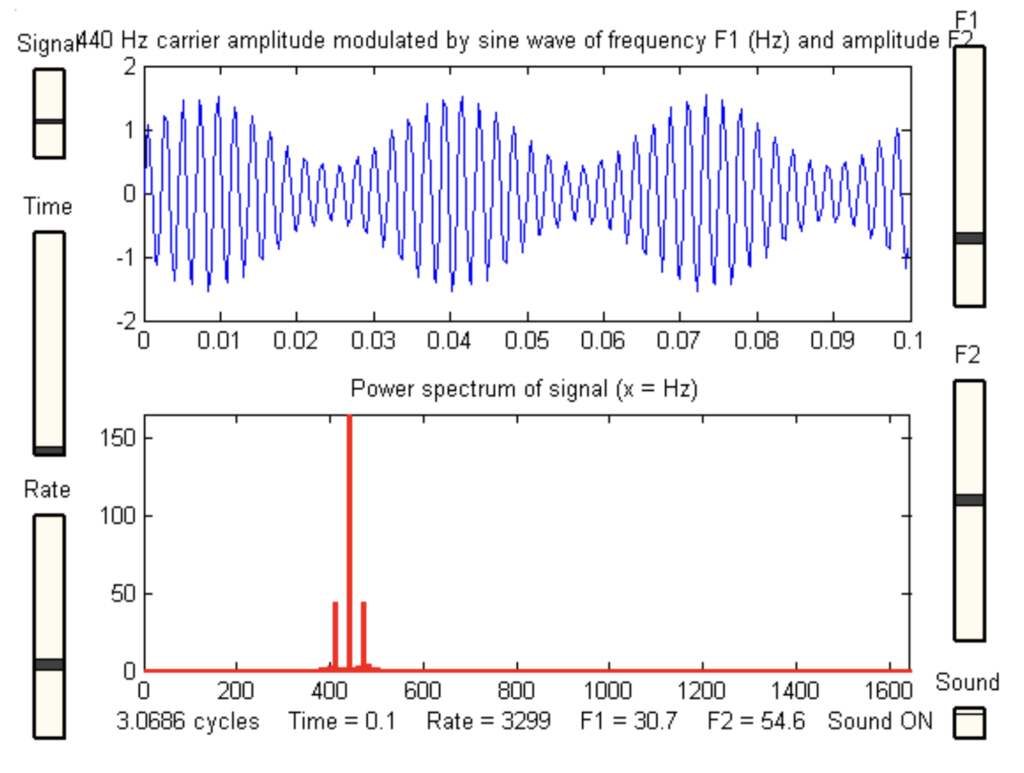

I’ve started experimenting with some sound for the work. Here’s a little sneak preview…

Image Description: On a black background, a spectral, bouncing waveform in pale lilac shakes with the sound. It’s like a hundred extremely thin horizontal lines zigzagging across the screen, slowly changing formation.

Audio Description: An echoey hum pulses in and out, as if a hundred people with the same voice are all humming the same thing at the same time in an underground cave. A kind of haunting wind flows throughout.