Modern AI, most prominently represented by Large Language Models (LLMs), prompts a fundamental question: does it contain consciousness? To pose the question another way, the original wellspring of the AI concept lies where brain scientists, computer scientists and mathematicians began to explore whether consciousness itself could be understood as a mathematical or computational process, as a system. This inquiry asks whether today’s advanced automation is merely sophisticated mimicry or a genuine step towards the sentient machines envisioned by pioneers of the field. Noam Chomsky, for one, has openly criticised the GPT model as a fake intelligence, a copycat only. Or is there an even deeper question: is there any form of computing that can capture the differences between intelligence, awareness and consciousness? Or perhaps we simply don’t understand our own kind, and those three words are just a game of our language, a misconception about things that never existed.

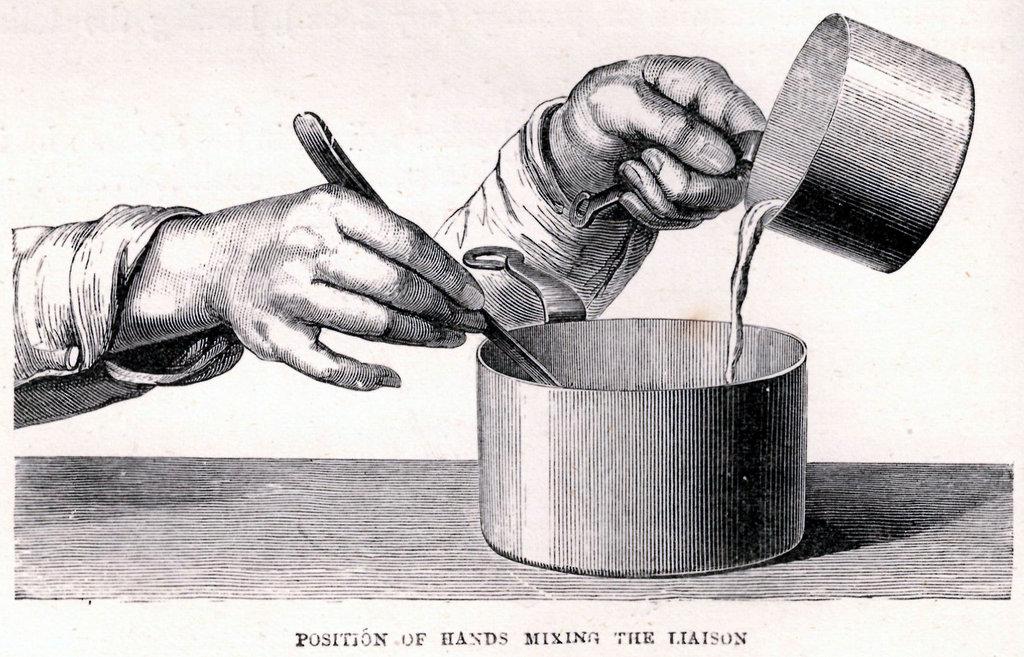

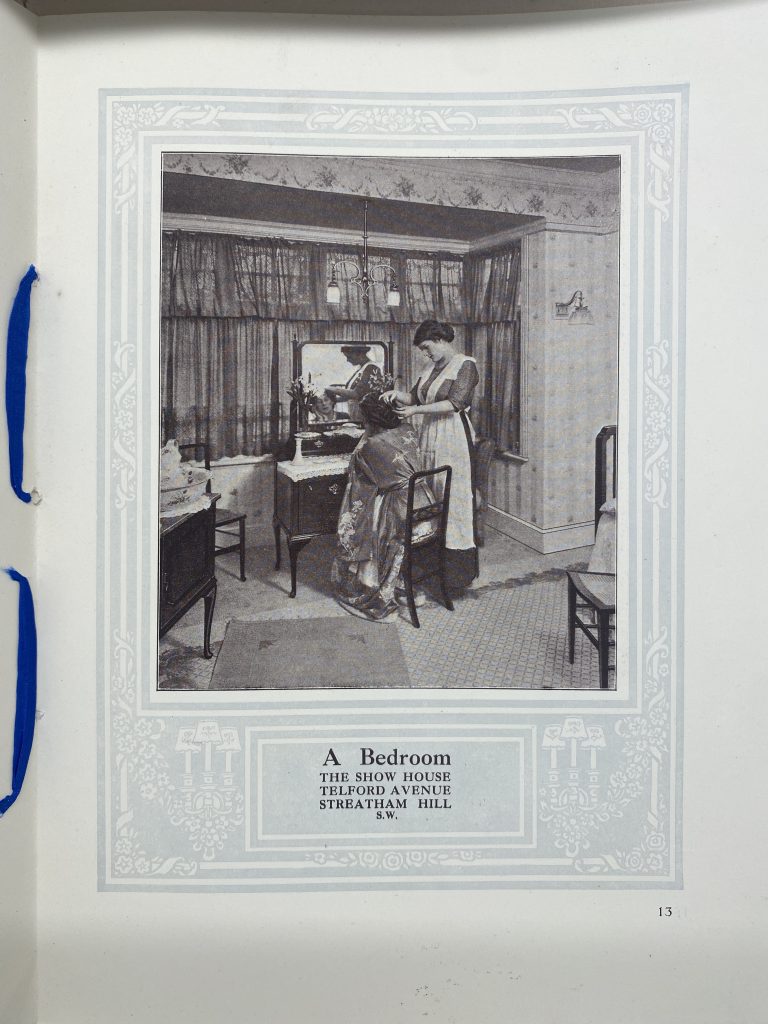

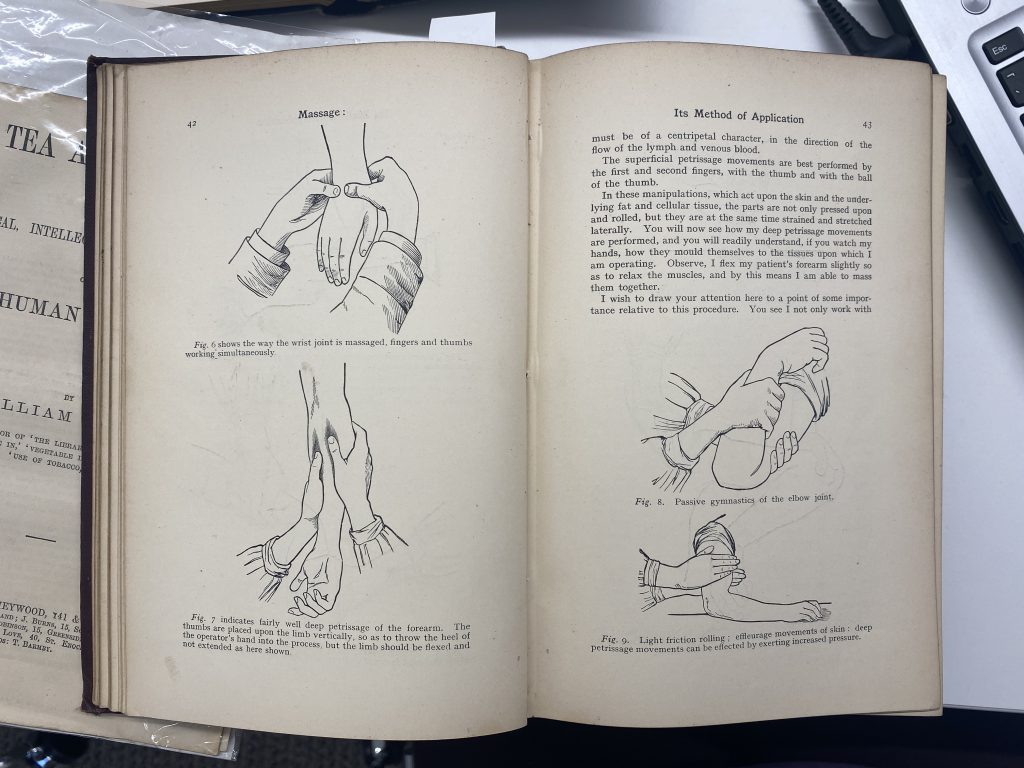

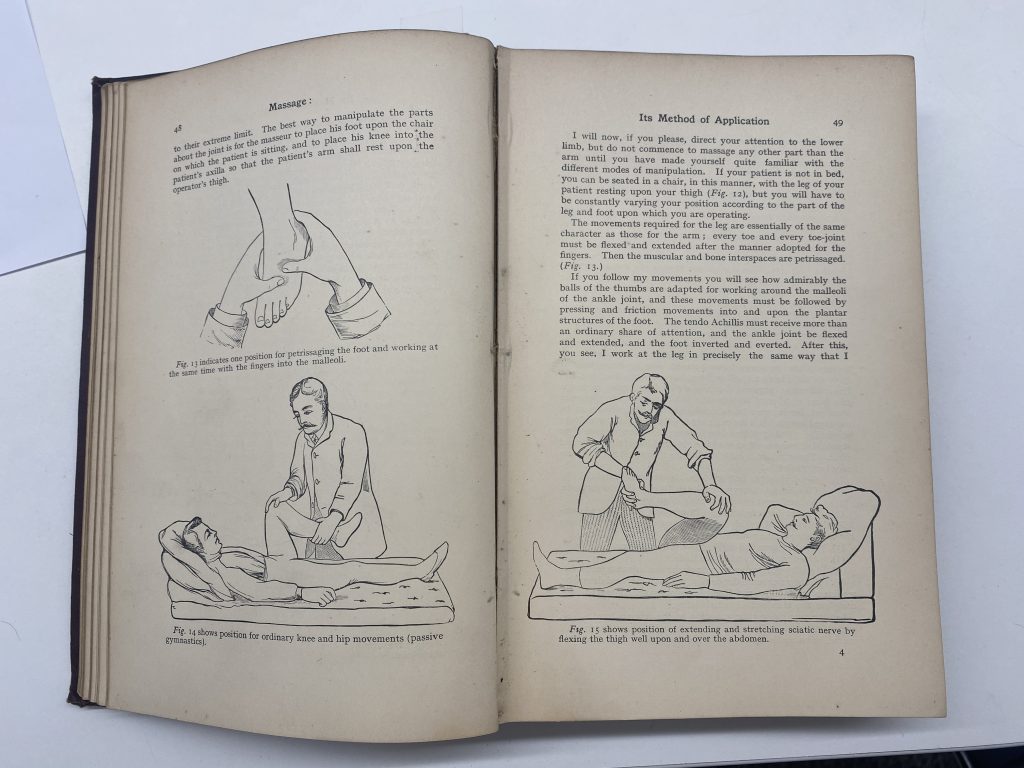

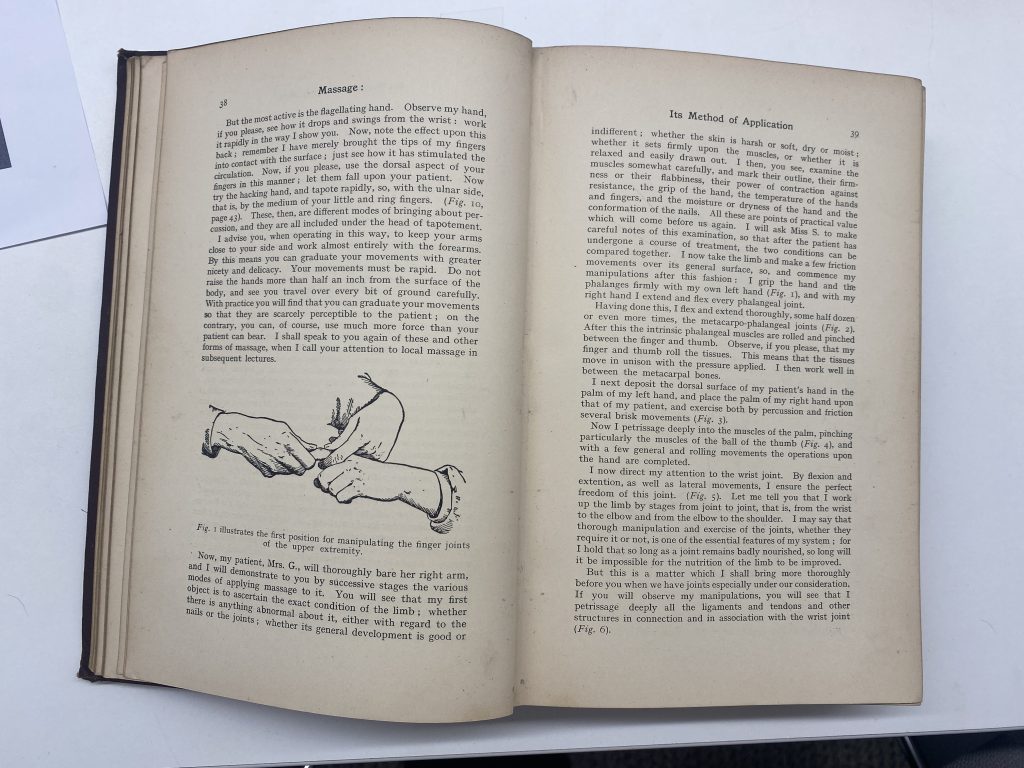

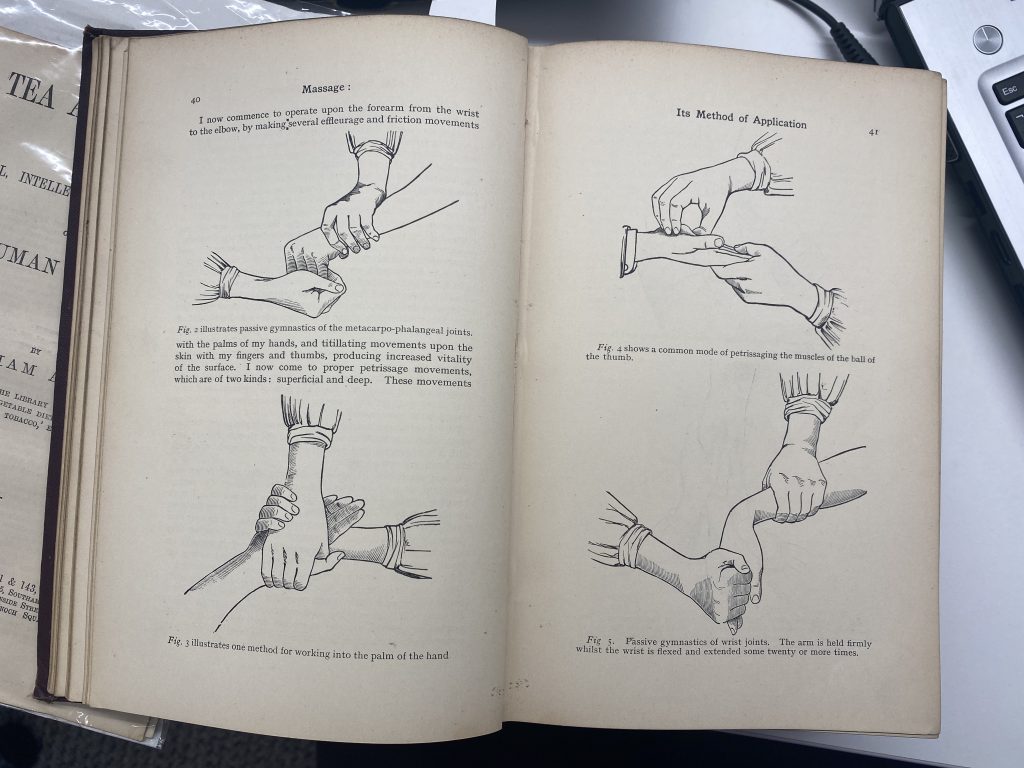

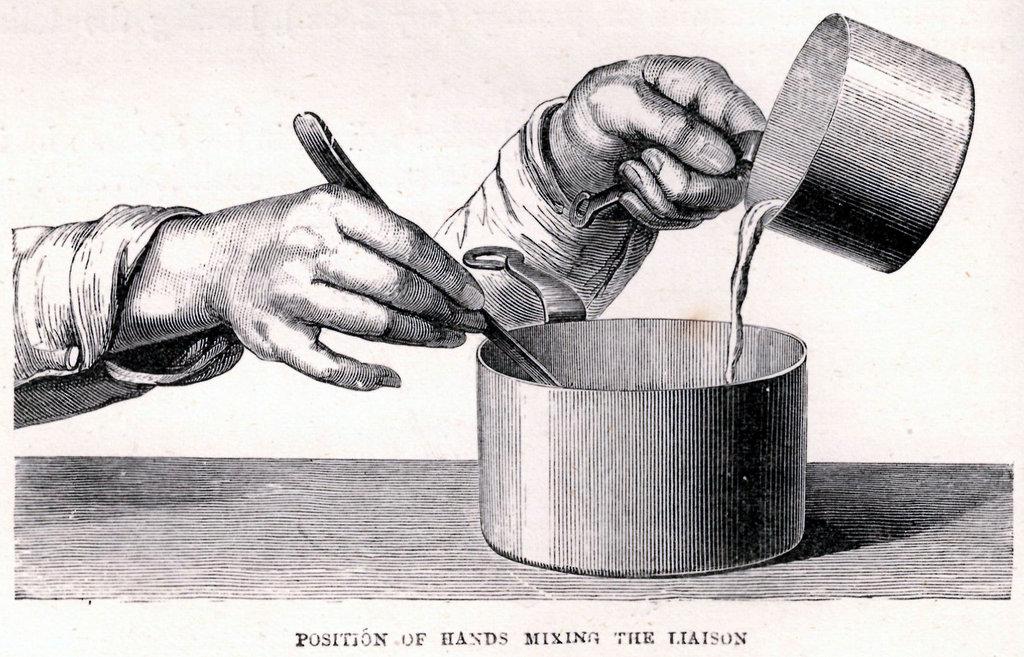

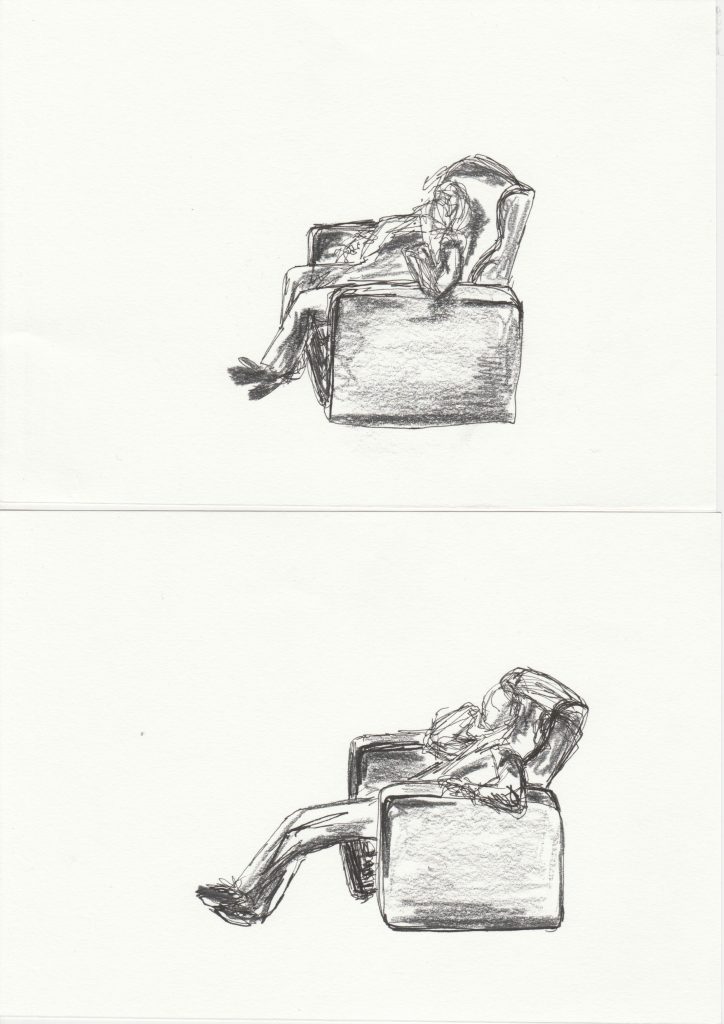

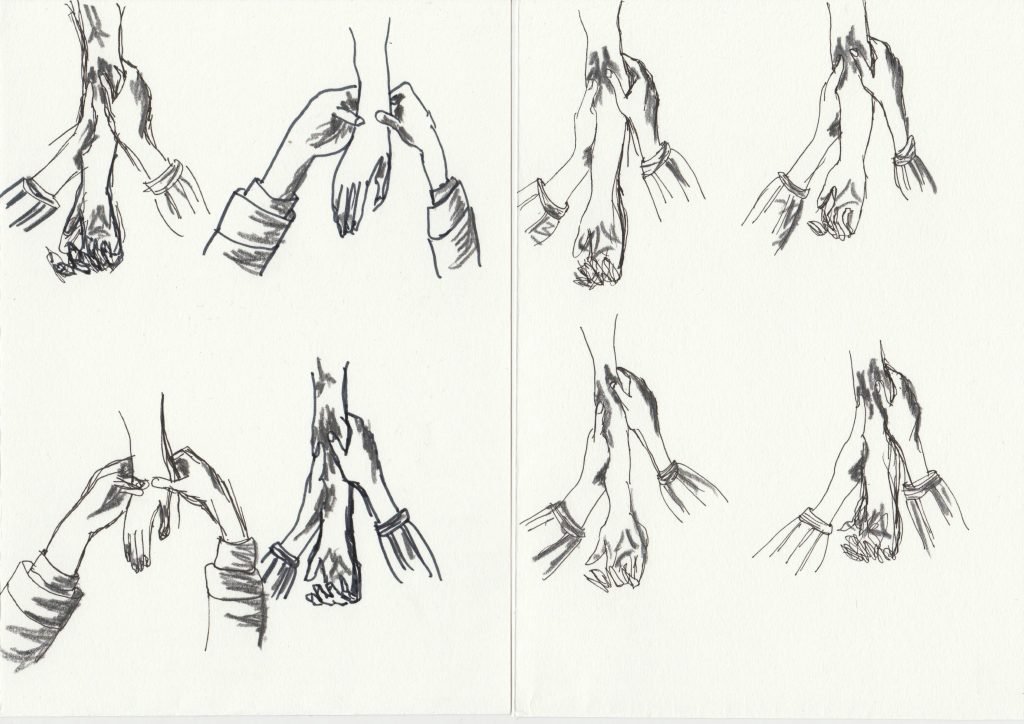

Since my last post, I decided I wanted to try and find more instructional images. I was really averse to going online and doing some simple searches as I thought they wouldn’t lead me to anything interesting, so I made a plan to try out my local library. I hoped to magically be drawn to something but it didn’t quite work out like that.

I looked through books on health and drawing but the images and illustrations were a bit too… anatomical. I found one on stretches featuring people photographed at every stage of the movement and maybe it’s that the images were too active.

I will revisit this post again tomorrow because there may be more to add after I’ve slept on it. It may be that I need to reluctantly make use of online search engines too.

Still from Zeitgeist (2007)

This film was circulating the internet when I was a teenager. It’s a conspiracy theory-style documentary that connects recurring themes throughout many of the world’s most prominent religions, some still practiced, some not. We passed it between us like contraband, thinking we’d been given a clear-headed, logical way to understand much of the totality of human history. I mean, we had been given a logic, but it was off.

Aside from being fundamentally irrelevant to the actual point of religion, many of its claims are totally factually incorrect. For one thing they equate ‘the sun’ with ‘the son’ (referring to Horus, Jesus, and other figures) because said out loud, they’re the same word. In English. Which didn’t exist five thousand years ago. A quick check of the Aramaic and they don’t seem so related…

The sun | שמש | “shmash”

The son | בר | “bar”

But now I worry I’m falling into an ancient rabbit hole. Can a deep debunking of a conspiracy theory become apophenic? I have to stop.

I find myself thinking about the warped, low-res audio and image of this film, and of the layered diagrams and crafted depictions of deities. It’s an aesthetic that I think is still with us, if a little updated. I’m very much holding it in mind as I work on this piece.

I’ve started experimenting with some sound for the work. Here’s a little sneak preview…

Image Description: A spectral, bouncing waveform in pale lilac shakes with the sound, on a black background. It’s like a hundred extremely thin horizontal lines zigzagging across the screen, slowly changing formation.

Audio Description: An echoey hum pulses in and out, as if a hundred people with the same voice are all humming the same thing at the same time in an underground cave. A kind of haunting wind flows throughout.

Since the start of the residency, I’ve had these flashbacks to making in 2020. The Zooms and online uploads and sharing screens. These are all embedded in our daily lives now but the exclusivity of it in this residency really reminds me of that time. I think perhaps some clues about how to approach this month might lie in those flashbacks.

I thought back to work I had made then and thoughts I had about my practice and how it changed. There was something about being able to work entirely from my immediate surroundings (home) or my inner thoughts and memories. For my time here, I would really like to draw on that. It feels like a gentler way of working?

I went back to some drawings from 2022 which were my quick interpretations of instructional images. I always found it tricky to explain what I liked about them or their function within my practice. Fast forward to last night and I think it finally clicked (better late than never!) that my translation of them into drawing seems to soften them and give them a tenderness which would hopefully say something about how those actions feel?

This is at the core of what I’m working on.

Jenn Ashworth proposes the idea of apopheny vs epiphany in her 2019 memoir Notes Made While Falling. In the book, she describes a traumatic birth followed by a mental and physical unravelling. Part of this is apophenia – a kind of logic that connects patterns; names; numbers; anything, which consensus reality would say is not actually connected. Most often it’s applied as a pathology, often in reference to conspiracy theories. Jenn says ‘I can’t stop connecting things’, and rather than self-pathologising, sets this beside her religious background to think about this experience of apopheny alongside the experience of epiphany – the realisation of a profound and real truth. But how do you know which is happening within you?

This idea has been on my mind since I read the book (and reread it, and reread it), and it’s the heart of the work I’m making for Vital Capacities. The work I make here will also feed into a solo show of mine opening early next year, so it’s really good to be able to delve in deeply in this public way – a rare chance to be able to share the intricacies of a looottt of research, experimentation, and thought.

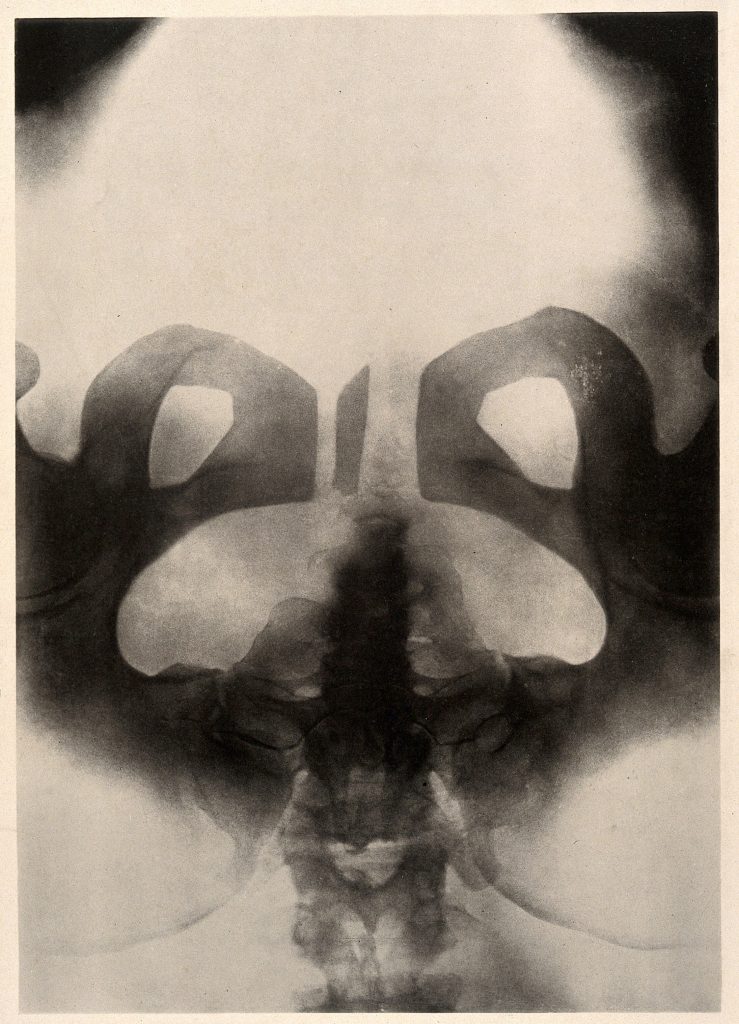

Image Credit: This image is featured on the front cover of ‘Notes Made While Falling’. More info: A woman’s pelvis after a pubiotomy – to widen the birth canal for a vaginal delivery of a baby. Collotype by Römmler & Jonas after a radiograph made for G. Leopold and Th. Leisewitz, 1908.

Image Description: It’s a ‘collotype’, which basically looks like a fuzzy x-ray, of a pelvis. Smudgy black and white, it’s not immediately obvious to non-medically trained eyes what we’re looking at, but after a while it seems that it’s upside-down, with the little tail bone sticking upwards, hips curling inwards.

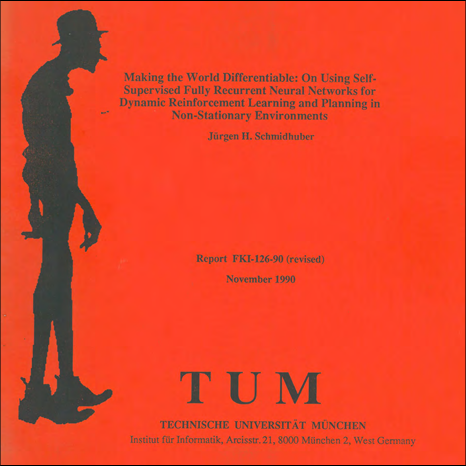

The contemporary world is becoming increasingly influenced by artificial intelligence (AI) models, some of which are known as ‘world models’. While these concepts gained significant attention in 2025, their origins can be traced much further back. Jürgen Schmidhuber introduced the foundational architecture for planning and reinforcement learning (RL) involving interacting recurrent neural networks (RNNs)—including a controller and a world model—in 1990. In this framework, the world model serves as an internal, differentiable simulation of the environment, learning to predict the outcomes of the controller’s actions. By simulating these action-consequence chains internally, the agent can plan and optimise its decisions. This approach is now commonly used in video prediction and simulations within game engines, yet it remains closely related to cameras and image processing.
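To make the controller and world model idea a little more concrete, here is a deliberately tiny sketch. It is not Schmidhuber’s architecture: the names `worldModel`, `cost`, `planFirstAction` and `run`, the three-action set and the one-dimensional world are all assumptions made for illustration, and the world model is a hand-written function rather than a learned, differentiable recurrent network. It only shows the basic loop of imagining action-consequence chains before acting.

```javascript
// Toy illustration only: a 1-D world where the agent wants to reach a goal position.
// The "world model" here is a hand-written stand-in for a learned predictor.

const ACTIONS = [-1, 0, 1]; // step left, stay, step right (hypothetical action set)

// World model: predicts the state that follows an action.
function worldModel(state, action) {
  return state + action;
}

// Cost of being in a state: its distance from the goal.
function cost(state, goal) {
  return Math.abs(goal - state);
}

// Controller: imagines every action sequence of length `horizon` using the
// world model, and returns the first action of the cheapest imagined rollout.
function planFirstAction(state, goal, horizon) {
  let best = { action: 0, total: Infinity };
  function rollout(s, depth, firstAction, total) {
    if (depth === horizon) {
      if (total < best.total) best = { action: firstAction, total };
      return;
    }
    for (const a of ACTIONS) {
      const next = worldModel(s, a);
      rollout(next, depth + 1, depth === 0 ? a : firstAction, total + cost(next, goal));
    }
  }
  rollout(state, 0, 0, 0);
  return best.action;
}

// Acting: plan, take one step, then plan again from the new state.
function run(start, goal, steps) {
  let state = start;
  for (let i = 0; i < steps; i++) {
    state += planFirstAction(state, goal, 3);
  }
  return state;
}
```

In this toy the imagined world and the real one are identical, so planning always succeeds. The interesting cases arise when the learned model’s predictions drift away from what actually happens.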

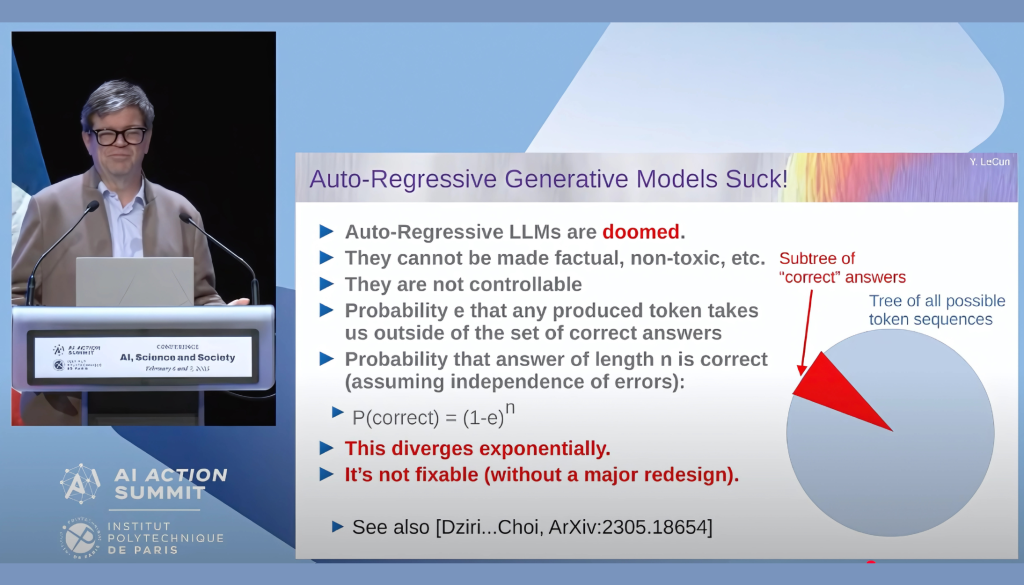

Despite the advances made, a fundamental limitation first identified in 1990 continues to hold back progress: the problem of instability and catastrophic forgetting. As the controller guides the agent into new areas of experience, there is a risk that the network will overwrite previously learned knowledge. This leads to fragile, non-lifelong learning, where new information erases older representations. Furthermore, Prof. Yann LeCun mentioned in his presentation ‘The Shape of AI to Come’ at the AI Action Summit’s Science Days at the Institut Polytechnique de Paris (IP Paris) that the volume of data a large language model contains remains minimal compared with a four-year-old child’s data volume of 1.1E14 bytes. One of the titles of his slides that has stayed in my mind is “Auto-Regressive Generative Models Suck!” In the area of reinforcement learning, the AI’s policies often remain static, unable to adapt to the unforeseen complexities of the real world. In other words, the AI does not learn after the training process. Recently, emerging paradigms like Liquid Neural Networks (Ramin Hasani) and Dynamic Deep Learning (Richard Sutton) have attempted to address this rigidity. However, those approaches still rely heavily on randomly selecting and cleaning parts of the neural network in order to maintain the learning dynamic and potentially improve real-time reaction and long-term learning. They also still face challenges in solving the problem of AI’s hallucinations. A fundamental paradigm shift for AI is needed, but it will take time, and before it arrives the current paradigm may already be overwhelming for both machines and humans.

Photographed by YEUNG Tsz Ying

Hi, welcome to my online space. My name is Lazarus Chan, and I am a new media artist now based in Hong Kong.

My work crosses the intersections of science, technology, the humanities, and art, and I am particularly fascinated by themes of consciousness, time, and artificial intelligence. Much of my work is related to generative art and installations, posing questions from a humanistic and philosophical standpoint. I will create a new work, “Stochastic Camera (version 0.4) – weight of witness”, in August 2025, and you can follow my progress here.

This studio is an online space for me to continue my explorations and share my thoughts with you. I will write a series of posts, sharing my ongoing reflections on AI as it becomes more integrated into our world.

You are welcome to leave a comment and send me questions.

Lazarus Chan

More about the photo:

https://www.lazaruschan.com/artwork/golem-wander-in-crossroads

Hi, I’m Leah Clements, an artist from and based in East London.

I’m really interested in moments of transcendence – these might be near death or out of body experiences, or profound shifts in psychology or physicality. Sometimes this is very scientific, sometimes it veers towards the paranormal. I want to get to these moments where you have one foot in this world and the other in another.

A lot of this comes from being chronically ill myself, but this point of departure usually expands outwards into other people’s experiences, with an intent to re-collectivise.

During the residency I’m planning to work on a new moving image scene where icons from ancient, medieval, and contemporary sites will come together to forge a new symbol. This is growing out of my research into apopheny vs epiphany (more on that later!) and thinking about how we look for meaning when it feels lacking, and whether we can know if we’re looking for it in the right or wrong places. It will give me a chance to try (and play about with) a new technique of animation in film editing.

Comment any thoughts you have, or ask me questions – I look forward to sharing some of my research into this and talking with you about it…

Hi, I’m Elora Kadir and welcome to my studio. I’m an artist based in London, working across installation, drawing, photography, video, and found objects. My work centres around lived experience with disability, and the subtle ways it interacts with the world around us—whether that’s through navigating buildings, dealing with forms, or the quiet tensions that arise from systems designed for able-bodied norms.

During this residency, I’m planning to explore moments of dissonance – those small, often overlooked points where body and environment don’t quite align. I’m interested in how access (or lack of it) gets embedded into the everyday, and how art can make these frictions visible. I’ll be experimenting with materials, images, and texts to reflect on these encounters. I’m also curious as to how it will feel to be a part of this virtual space and what influence that may have (if any!) on my process.

Feel free to have a look around and leave a comment or message.


I’m excited to be part of this residency and to have the opportunity to focus, reflect, and share my process in a more open way than I typically allow.

Over the next few weeks, I’ll be using this time and space to explore how repetition, memory, and digital tools intersect — particularly how small acts of making can accumulate into something slower, quieter, and more intentional.

My practice often shifts between design and more contemplative visual work, and I’m interested in what happens when the usual boundaries blur — when something functional begins to feel poetic, or when a mistake reveals a new direction.

This residency is as much about paying attention as it is about producing. I’ll be sharing fragments, trials, and thoughts along the way.

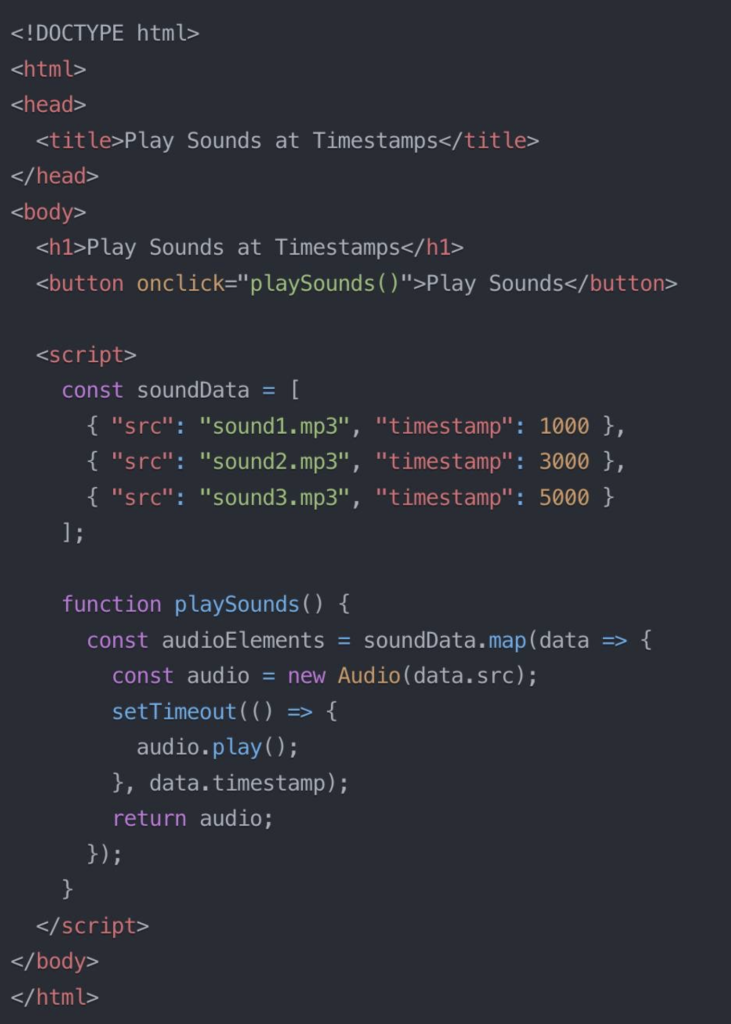

Hello! So this has been super cool and fun, hope you find it fun too! I’ve been working with JavaScript to make sure we are playing sounds at the exact right times!

Imagine you have two different sound patterns:

To make this work, we need to play these sounds back at just the right moments. We can use a bit of JavaScript magic to play sounds at specific timestamps. Check out this script:

Our machine learning model first identifies when each sound occurs, and we then export those timestamps to create our soundData array. Using JavaScript, we can now play the sounds exactly when the AI model hears them! Yay!

Here’s an accessible version of the code you can copy and paste if you’d like to give it a go yourself!


```html
<!DOCTYPE html>
<html>
<head>
  <title>Play Sounds at Timestamps</title>
</head>
<body>
  <h1>Play Sounds at Timestamps</h1>
  <button onclick="playSounds()">Play Sounds</button>

  <script>
    // Each entry names an audio file and when to play it,
    // in milliseconds after the button is clicked.
    const soundData = [
      { "src": "sound1.mp3", "timestamp": 1000 },
      { "src": "sound2.mp3", "timestamp": 3000 },
      { "src": "sound3.mp3", "timestamp": 5000 }
    ];

    function playSounds() {
      // Create each Audio element up front so it can start loading,
      // then schedule it to play at its timestamp.
      soundData.forEach(data => {
        const audio = new Audio(data.src);
        setTimeout(() => {
          audio.play();
        }, data.timestamp);
      });
    }
  </script>
</body>
</html>
```
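The step from model output to the soundData array could look something like the sketch below. The shape of `detections`, the `files` mapping, the file names and the helper `buildSoundData` are all assumptions made for illustration, not the actual export format of the model.

```javascript
// Hypothetical detections, as they might be exported from the model:
// a label for each recognised sound plus the time (in seconds) it was heard.
const detections = [
  { label: "doom", time: 1.0 },
  { label: "tak", time: 3.0 },
  { label: "doom", time: 5.0 },
];

// Assumed mapping from label to audio file; the file names are placeholders.
const files = { doom: "doom.mp3", tak: "tak.mp3" };

// Turn detections into the { src, timestamp } shape used by soundData,
// with timestamps in milliseconds, sorted from earliest to latest.
// Detections whose label has no matching file are skipped.
function buildSoundData(detections, files) {
  return detections
    .filter((d) => Object.prototype.hasOwnProperty.call(files, d.label))
    .map((d) => ({ src: files[d.label], timestamp: Math.round(d.time * 1000) }))
    .sort((a, b) => a.timestamp - b.timestamp);
}

const soundData = buildSoundData(detections, files);
```

The result has the same shape as the hand-written soundData list in the page above, so it could be used in its place.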

I thought about Polly Atkin’s poem Unwalking (referenced in my last post) a lot when I first returned to my allotment plot in January 2022, having not visited the site for 2 years due to shielding. We had been given formal notice by the allotment committee to either improve the plot or end our tenancy. I was in the midst of an intensely difficult period in my life, and I was unsure whether it was possible to continue the commitment. I was trying to figure out having a career as an artist whilst being sick, and how to do those things in a sustainable way that doesn’t just leave you burnt out. I believe that this figuring out will be a lifelong mission, one that never has a fixed answer. I still go through periods (just very recently, in fact) where living in this body feels truly incompatible with a career as an artist. But what did become clear when returning to my allotment in 2022 was that, in rediscovering my gardening practice, I could do something more than just survive my body and my job; I could build something bigger, something beyond myself. I decided to give myself 6 weeks and see what might happen…

This was the first photo I took of what the plot looked like when I first returned in January 2022. It was such a special afternoon. It was a weekday and I had been working, and my mum had asked if I wanted to go to the plot just to have a look. I was reluctant. Part of having an energy-limiting condition means that I never know when I am overexerting myself, and I am always second-guessing myself as to whether or not the thing I did is what made my pain worse. It’s a particularly challenging aspect of living with sickness, and something I find really hard within the context of a career. So re-engaging with the allotment on a normal working day felt pretty extreme; simply leaving the house and turning up felt like I was pushing the boundaries of what was possible (it always does). But that afternoon, I felt the spark of what has always drawn me to gardening, and amongst all the overgrown weeds and debris, I felt excited to think what might be possible here.

Polly’s poem Unwalking was in my head a lot as we began grappling with how to go about using the space again. It became clear quite quickly, that the only way to manage the space at this point – whilst existing in crip-time – was to cover most of it up. So that’s what the first year was spent doing; taking things down and very slowly mulching and covering the beds. We began by adopting a no-dig approach by placing cardboard over the beds, then covering them in a mulch of compost or manure.

This mulching process was so exciting; it felt like I was making these large-scale collages with muck and cardboard. Again, scale becomes a really exciting component of what draws me to this gardening practice. I love feeling in awe of bigness, to feel like I’m in the presence of something much, much bigger than me. I think I’m always searching (even in the smallest of artworks I make) for the feeling I get when I’m next to a huge lump of gritstone rock at Curbar Edge, my local rock face in the Peak District National Park. It’s the same feeling I get when I experience Wolfgang Tillmans’ work in the flesh, where the bigness just carries me away across landscapes and into another space. When mulching and covering the beds at the allotment, all of a sudden it moved beyond an ordinary gardening task and became a kind of space-making.

I often describe my work building the rose garden (and maintaining an allotment plot in general) as totally absurd; trying to get my sick and tired body to sculpt this huge space simply feels a bit ridiculous when met with what that space demands of my very limited energy. It feels like I’m being asked to hold the space up as if it were some kind of giant inflatable shape, and all I have are my tired arms to try and keep it from falling over and rolling away. Sometimes it feels like the chanting at a football match, the way the chorus from the crowd at one and the same time feels both like a buoyant wave of singing and a crash of noise imploding; always on the edge of collapse. And I do have help. It would be impossible to do it without that help, and wrong of me not to clarify this essential component of my access to this practice. And even with this, the task at hand still feels enormous. But I think that might be part of what fuels the work in this way. This whole existence – enduring/living/loving through sickness – is absurd. It’s an outrageous request that is demanded of our bodies, of our minds, of our spirit. But I think I’m interested in what happens when I sit with sickness, hold hands with it, move through this world by its side instead of operating from a place of abandon or rejection or cure. I want to hold myself holding sickness, and find the vast landscapes within upon which to settle.

After heavily mulching the cardboard we then covered the beds in black plastic sheeting to block out all light, and allow the weeds to rot down into the soil ready for planting in the autumn. This really enhanced the sense that I was working with a kind of collage. The beds immediately looked like covered-up swimming pools, and I loved playing with the various allotment debris that we had gathered to weigh down the sheeting. This whole process took up the entire first year of the work we did on the plot. There was little to no “proper” gardening (as in sowing/planting/cultivating) in that first year. And yet, I was there, I was at home dreaming about it, I was making something, committing time and energy to a place with a hope to emerge into a future. All the components of a garden were present; I was ungardening.

Despite the plot now looking significantly different to that first year, I am still ungardening. As with everything that is allowed to work on crip-time, ungardening facilitates whole ways of experiencing the garden that would otherwise be lost. Ungardening allows for me to keep my body at the centre of my gardening practice, and for the garden to exist beyond me. Rather than a singular space, the garden becomes a shifting, interconnected ground of thinking and growing and imagining and living and dying. More than anything, ungardening reminds me that the garden is made for my absence, and my absence holds more than a missing body.

Let’s get one thing straight! ☝

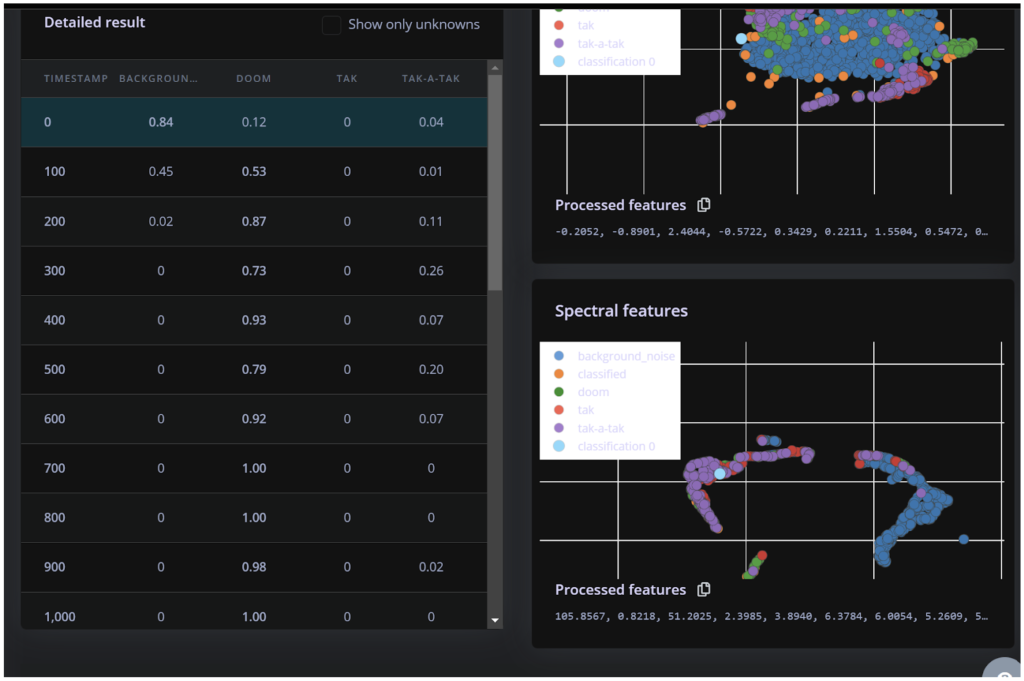

Doom (1-second pause) Doom ⏳⏳

is a DIFFERENT sound from

Doom (half a second pause) Doom ⏳

I need to play those back using real instruments at the right time. To achieve this, I have to do two things:

Today, I want to chat about the first part.

Once I run my machine learning model, it recognises and timestamps when sounds were performed. You can see this in the first photo below. The model shows the probabilities of recognising each sound, such as “doom”, “tak”, or “tak-a-tak”.

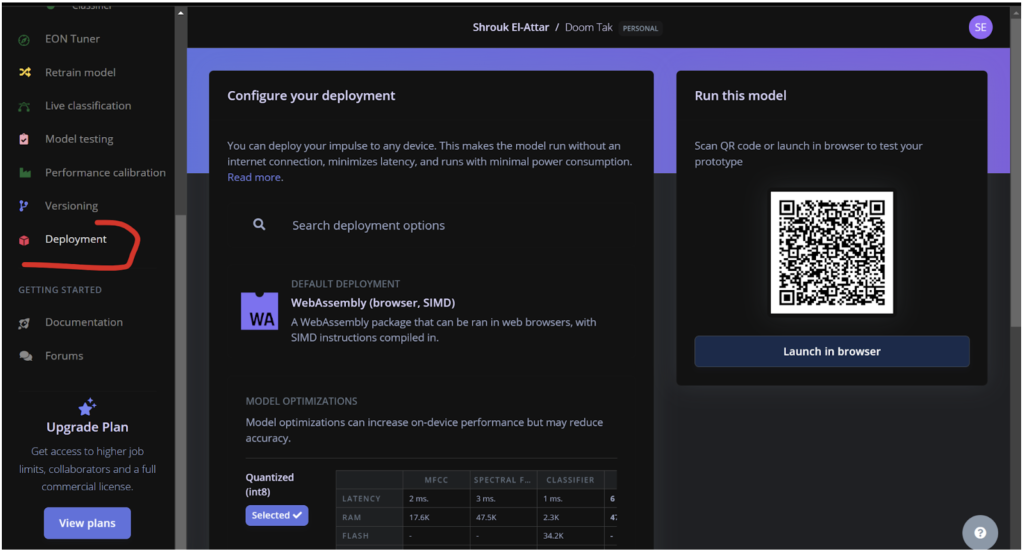

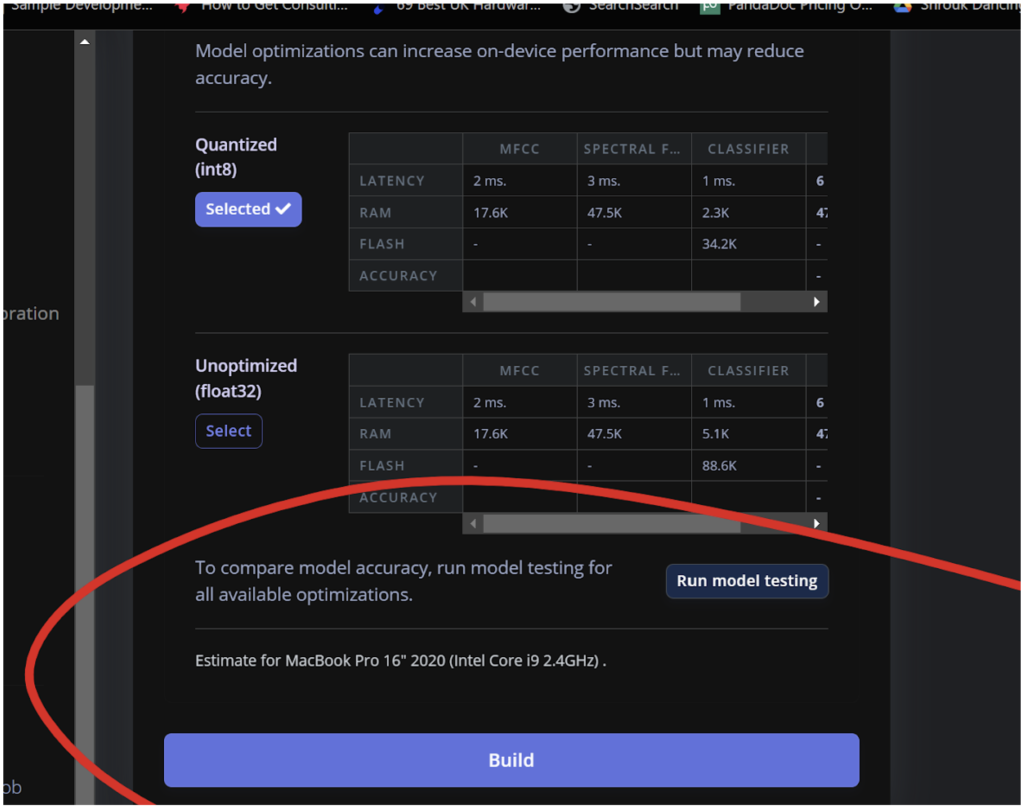

Next, I need to export this model as a WebAssembly package so it can run in a web browser. This allows anyone to interact with my online doom/tak exhibition!

In the second photo, you can see the deployment section where I configure the WebAssembly package. This makes the model run efficiently in web browsers without an internet connection, minimising latency.

Exporting the model is straightforward. Once exported, it generates a JavaScript model that can be used on a webpage. Now, I can call this entire machine learning model from a web app script to do whatever I want.
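As a rough idea of what a web app script might then do with the model’s output, the sketch below turns per-window probabilities into timestamped sounds. The `windows` data, the “noise” label, the 0.8 threshold and the helpers `topLabel` and `detectEvents` are assumptions for illustration. The exported model’s real interface is not shown here and may look quite different.

```javascript
// Hypothetical classifier output: one entry per audio window, with the window's
// start time in milliseconds and a probability for each label.
const windows = [
  { time: 0,    probabilities: { doom: 0.05, tak: 0.03, noise: 0.92 } },
  { time: 250,  probabilities: { doom: 0.91, tak: 0.04, noise: 0.05 } },
  { time: 500,  probabilities: { doom: 0.88, tak: 0.06, noise: 0.06 } },
  { time: 750,  probabilities: { doom: 0.10, tak: 0.02, noise: 0.88 } },
  { time: 1250, probabilities: { doom: 0.07, tak: 0.90, noise: 0.03 } },
];

// Return the [label, probability] pair with the highest probability in one window.
function topLabel(probabilities) {
  return Object.entries(probabilities).reduce((best, entry) =>
    entry[1] > best[1] ? entry : best
  );
}

// Record a timestamped event each time a confident, non-noise label starts.
// Consecutive windows with the same label count as one sound.
function detectEvents(windows, threshold = 0.8, ignore = "noise") {
  const events = [];
  let previous = null;
  for (const w of windows) {
    const [label, p] = topLabel(w.probabilities);
    const current = p >= threshold && label !== ignore ? label : null;
    if (current !== null && current !== previous) {
      events.push({ label: current, timestamp: w.time });
    }
    previous = current;
  }
  return events;
}

const events = detectEvents(windows);
```

With the sample data above, the two neighbouring “doom” windows are merged into a single event, followed by one “tak”.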

Easy peasy lemon squeezy!

https://chatgpt.com/share/cd501025-b4bc-43ce-a160-4c6a9bdc05ec

Yeah, so let’s do it in a different way. Let’s group it in, let’s just all of us trying to have a group chat with GPT. I just turn on the ChatGPT so we can have a conversation and then let’s see how is the capability. So, hey, GPT, we are a group of four people, five people. Yeah, and we are trying to experience or practice the creative process during our discussions. So, you can jump in to discuss anytime, but we may have several initial conversations or some ideas, and if you find it is a good time to step in, just tell me. All right, yeah, you can say, okay, if you’re ready.

ChatGPT

Okay, I’m ready! Let’s start your creative process discussion. Whenever you have an idea or want to bounce something off me, just let me know.

I think it is, um…

ChatGPT

It sounds like you’re still gathering your thoughts. What’s the main topic or project you’re working on today?

Yeah, today we have…

ChatGPT

Got it, you’re deciding on today’s focus. Are you thinking about brainstorming new ideas, planning a project, or something else?

Feel free to join. I mean, our friend is in a Zoom meeting, so they are trying to understand how is the capability of the AI tools, and I think this is a very handy way to demonstrate the creative process. But I would like to invite my friend to join our conversation too. Well, maybe you can tell how they can step in.

ChatGPT

Sure! Your friend can join the conversation at any time by simply sharing their thoughts or questions. If they have a specific idea or topic in mind, they can mention it, and we can discuss it together. Feel free to jump in whenever you’re ready!

Okay, maybe I just present my idea to my friend. I would like to invite ChatGPT as my companion and record my daily life, and it will become a diary or my personal journey. This journey will be a reference that creates a fiction character. For instance, it will be an instant me as a character, and then I will use this character as a… I will offer several backgrounds, let’s say it’s a sci-fi background or cyberpunk background or fantasy background, and it will fit in, become the main character of the story. What do you feel?

ChatGPT

That sounds like a fantastic and creative idea! Using ChatGPT to help record your daily life and then transforming that into a fictional character in various settings could make for a rich and engaging story. Do you have a specific background in mind to start with, like sci-fi, cyberpunk, or fantasy? Or would you like some help exploring different ideas?

Or could you give me several variations about the background of the story?

ChatGPT

Absolutely, here are a few variations you might like:

Sci-Fi Background: Your character lives in a futuristic city on Mars, where society is divided between the elite who live in towering glass skyscrapers and the working class who inhabit underground colonies. They uncover a secret about an impending alien invasion and must rally both classes to defend their home.

Cyberpunk Background: Set in a neon-lit megacity where mega-corporations control every aspect of life, your character is a hacker who discovers a conspiracy that could topple the corporate regime. They navigate the city’s dark alleys and high-tech surveillance systems to gather allies and fight for freedom.

Fantasy Background: In a world where magic is real but controlled by a powerful guild, your character is an ordinary person who stumbles upon a hidden magical artifact. This discovery thrusts them into a battle between the guild and a rebellion seeking to make magic accessible to all.

Post-Apocalyptic Background: After a global catastrophe, society has collapsed into scattered, warring factions. Your character is a scavenger who finds a relic of the old world that holds the key to rebuilding civilization. They must navigate dangerous territories and form uneasy alliances to achieve their goal.

Steampunk Background: In an alternate Victorian-era world powered by steam and clockwork, your character is an inventor who creates a machine that can change the course of history. They are pursued by secret societies and must travel across continents to protect their invention.

Do any of these settings inspire you?

Yeah, thank you. But I would like to take a pause here because I will jump back to the discussion with my friend and see how they feel.

ChatGPT

Of course! Take your time to discuss with your friend. When you’re ready to continue or if you need more ideas, feel free to let me know. Enjoy your conversation!

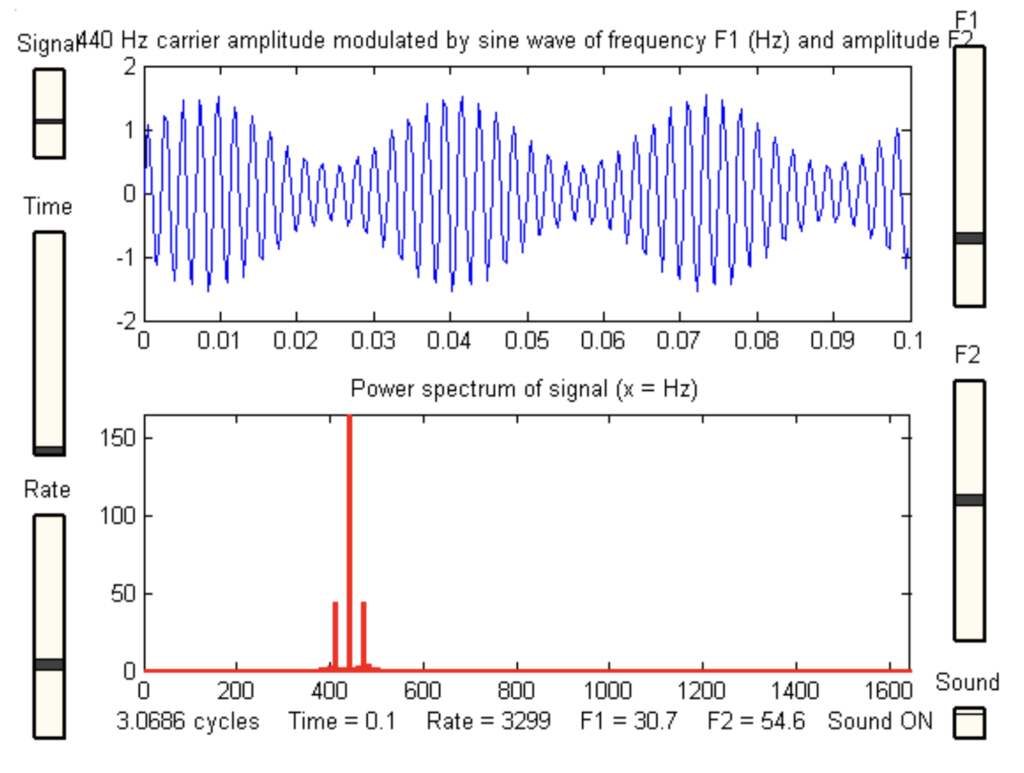

One of the big improvements I made to my machine learning model is using something called ✨ spectral analysis ✨

But what is Spectral Analysis?

Spectral analysis helps us break down sounds into their different parts. For example, when you hear a song, you can pick out the drums, guitar, and vocals, right?

Well, spectral analysis does something similar by looking at the different frequencies in a sound which makes it easier for our model to tell different sounds apart! Woohoo!

Why Use Spectral Analysis?

Unlike other methods that only look at how loud a sound is over time, spectral analysis gives us a detailed picture by showing us all the different frequencies! This helps our model recognise and separate sounds that might seem similar at first!

How We Use Spectral Analysis

First, we get the sound data ready. This means making the audio signals more uniform and cutting the audio into smaller parts. Then, we use a tool called Fast Fourier Transform (FFT) to change the sound data from a time-based view to a frequency-based view. This lets us see all the different frequencies in the sound. After using FFT, we pick out important details from the frequency data to understand the sound better.
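The steps above (make the signal uniform, cut it into parts, move to a frequency view, pick out a feature) can be sketched in a few small functions. This is only an illustration: the function names, the frame size and the choice of “strongest frequency” as the feature are assumptions, and it uses a plain discrete Fourier transform, which gives the same values as an FFT but far more slowly.

```javascript
// Scale samples so the loudest one has magnitude 1 (one way to make signals uniform).
function normalise(samples) {
  const peak = samples.reduce((m, s) => Math.max(m, Math.abs(s)), 0);
  return peak === 0 ? samples.slice() : samples.map((s) => s / peak);
}

// Cut the signal into consecutive frames of `size` samples (no overlap, for simplicity).
function frames(samples, size) {
  const out = [];
  for (let i = 0; i + size <= samples.length; i += size) {
    out.push(samples.slice(i, i + size));
  }
  return out;
}

// Magnitude spectrum of one frame via a direct DFT.
// An FFT computes the same values much faster; this version favours readability.
function magnitudeSpectrum(frame) {
  const n = frame.length;
  const mags = [];
  for (let k = 0; k <= n / 2; k++) {
    let re = 0;
    let im = 0;
    for (let t = 0; t < n; t++) {
      const angle = (2 * Math.PI * k * t) / n;
      re += frame[t] * Math.cos(angle);
      im -= frame[t] * Math.sin(angle);
    }
    mags.push(Math.hypot(re, im));
  }
  return mags;
}

// One simple feature: the frequency (in Hz) of the strongest bin.
function dominantFrequency(frame, sampleRate) {
  const mags = magnitudeSpectrum(frame);
  let best = 1; // skip bin 0, which only holds the constant offset
  for (let k = 2; k < mags.length; k++) {
    if (mags[k] > mags[best]) best = k;
  }
  return (best * sampleRate) / frame.length;
}

// Example: a synthetic 100 Hz tone sampled at 800 Hz.
const sampleRate = 800;
const tone = Array.from({ length: 128 }, (_, t) =>
  0.5 * Math.sin((2 * Math.PI * 100 * t) / sampleRate)
);
const firstFrame = frames(normalise(tone), 64)[0];
const peakHz = dominantFrequency(firstFrame, sampleRate);
```

A real pipeline would usually overlap the frames, apply a window function to each one, and keep many more features than a single peak frequency.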

We already use a method called MFCC (Mel-Frequency Cepstral Coefficients – check out my previous blog about it!) to get key features from sounds. By adding spectral analysis to MFCC, we make our model EVEN BETTER at recognising sounds!

It is still not perfect, but this has made my machine learning model much better at detecting and differentiating between doom and tak sounds!

In a previous post, I spoke about taking a response from someone describing what their womb would look like if it were a place and turning that into a prompt for AI.

As a refresher here is the prompt:

a photorealistic image of a room which looks like a lush garden inside which is representative of the uterus of someone with endometriosis

And here is the result:

Image Description

AI generated imagery of four hyper realistic photos of an interior design for the dreamy fairy tale bedroom in paradise, pink and green color palette, a lush garden full with flowers and plants surrounding it, magical atmosphere, surrealistic

Honestly, I wasn’t expecting the results to be as close to what was in my mind as this. I was really impressed. I’m intrigued to know which part of these responses represents the “endometriosis” section of the AI prompt.

I really like option 3 because the round shape of draped pink fabrics sort of looks like a womb or vaginal opening. It’s definitely my favourite, although I’m loving the weird abstract furniture that is depicted at the back of option two.

Since I like option three the most, I decided to work on it a bit further.

Image Description

A collage of pink and green floral patterns, vines, and foliage surrounded by lush jungle foliage, with mirrors reflecting in the background. The scene is set at dawn or dusk, creating an ethereal atmosphere. This style blends natural elements with fantasy and surrealism, giving it a dreamy quality in the style of surrealist artists.

Here are examples of subtle variations of the original image

And here are examples of strong variations of the original image.

Image Description

A collage of four images depicting lush greenery, pink drapes, and exotic plants in an opulent setting. The style is a mix between hyper-realistic botanicals and surreal digital art, with vibrant colors and soft lighting creating a dreamy atmosphere. Each element has its own distinct visual narrative within the composition, showcasing a harmonious blend of nature’s beauty and modern aesthetics. This combination creates a visually stunning representation that sparks imagination.

I prefer the strong variations because the garden room looks more overgrown and wild, but still retains its beauty, which feels closer to what the original response from the participant was saying.

Regardless of my preferences, I think my process will include me using all of these images as previs. I plan to then use 3D software inspired by all of the imagery seen here to create the scene. I’ll take features from all of the images as well as my own ideas that are closer to the original response from the participant to bring their “womb room” to life.

I’ll be honest though, the 3D production is exciting but also scary. I have a bit of imposter syndrome about whether I can actually deliver this. I sort of feel inferior to what Midjourney was able to create.