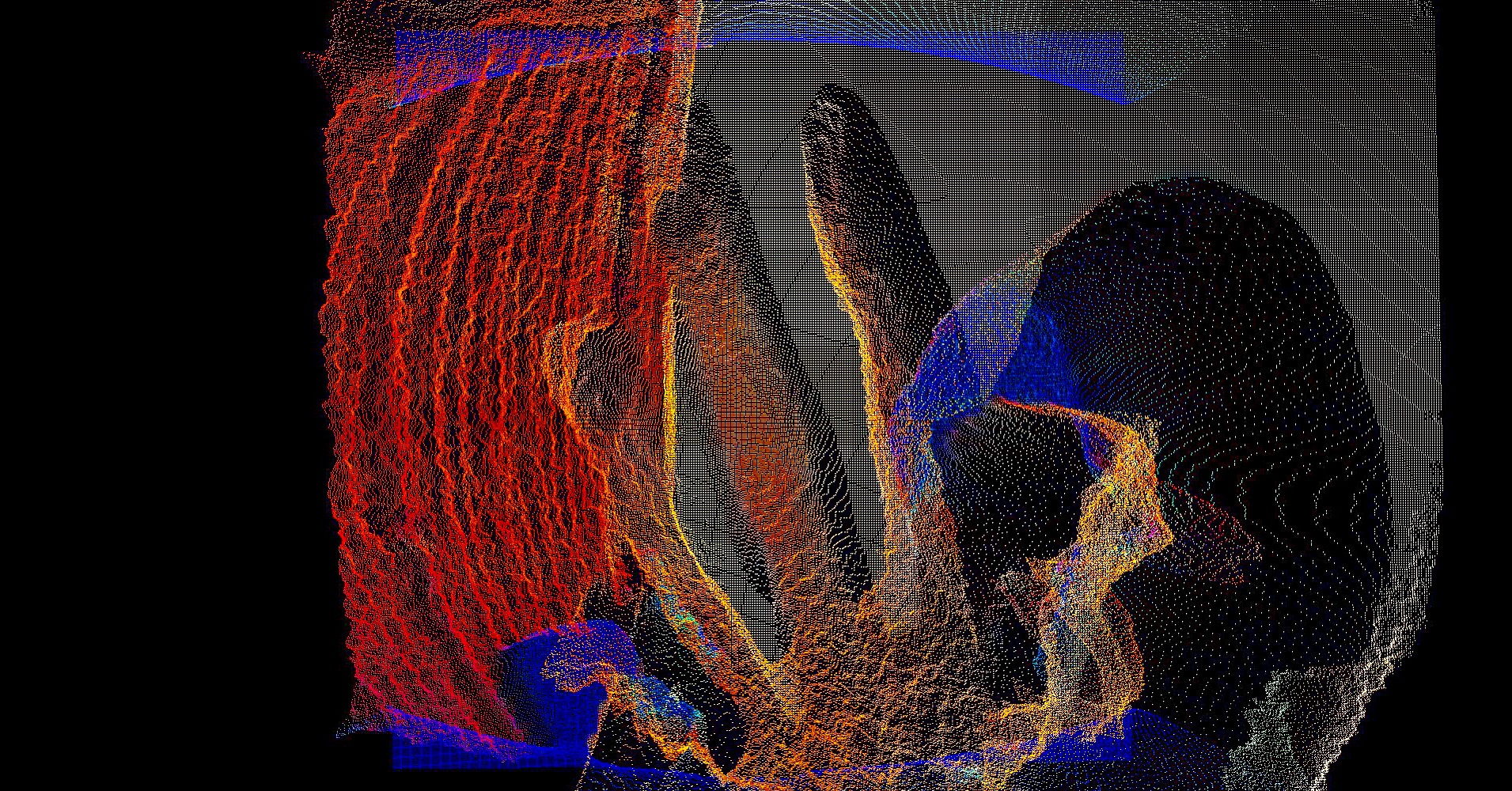

As an artist working with experimental technologies, hacking and repurposing tools to create artistic works, I’m often looking for ways to create intimate shared experiences. Even before the pandemic, much of my practice was conducted solely through computer-based interactions because of a lack of funding and other resources. This mode of working allowed me to focus my practice on making accessible works, and I began thinking about the accessibility of my works, in terms of language, technology and context, within a larger contemporary art conversation.

Continue reading “04 ಕಥೆ Kathe (Story) / Dematerialise”