Hey! I’m Jameisha, an artist-filmmaker and writer from South London.

As a disabled artist, I find inspiration in stories about how illness and disability intersect with themes of Black history, pop culture, identity and colonialism. Traditionally, I work with the moving image, however, I am currently expanding my practice to include digital art and immersive technology. My work seeks to explore how art can bridge empathy gaps and give an alternative viewpoint for how we talk about being ill.

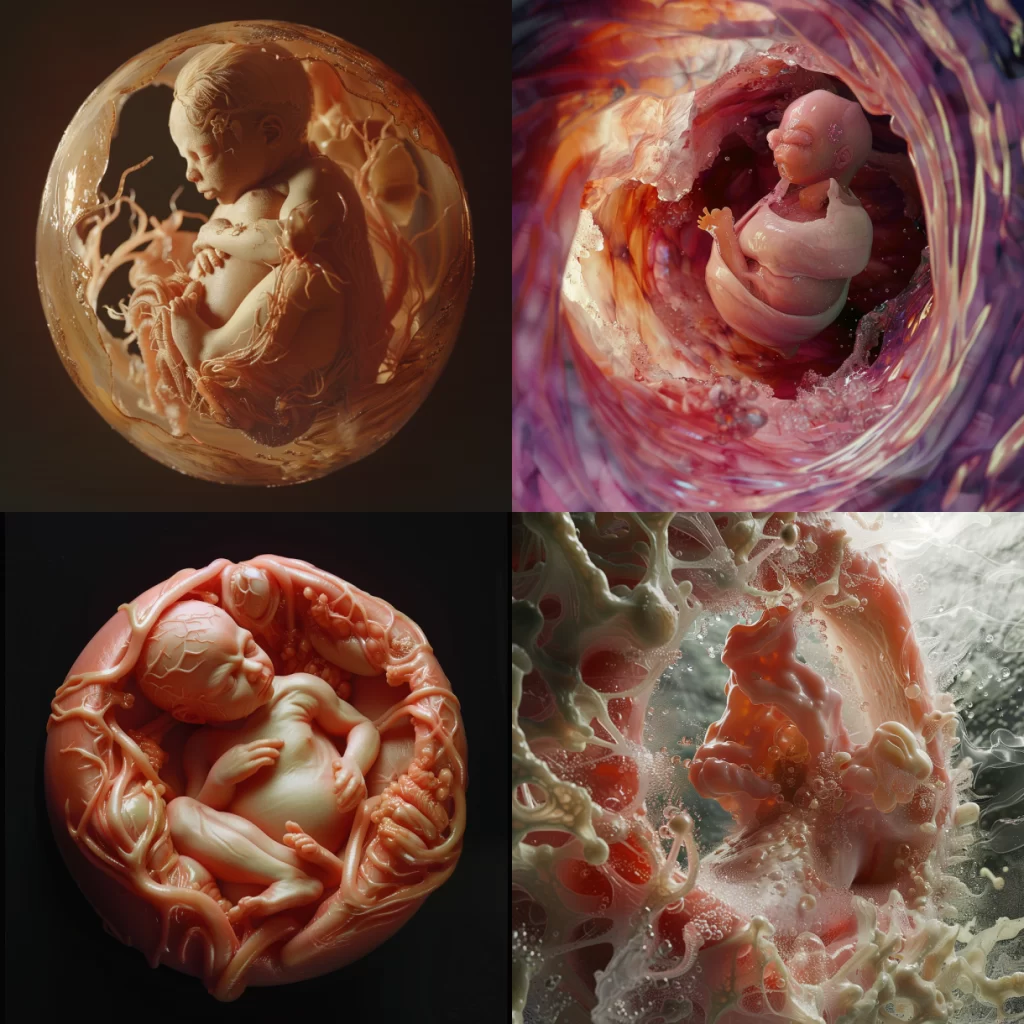

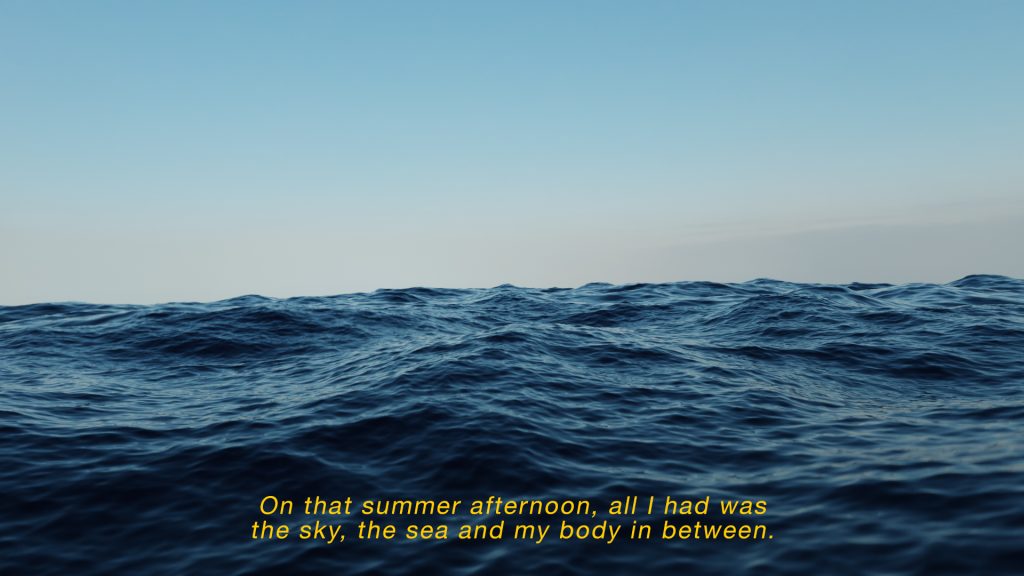

During the residency, I’ll be working on a project called WOMB ROOM. “If your womb were a place you could visit, what would it look like?” Through experimenting with digital art and AI, WOMB ROOM encourages people living with gynaecological conditions to re-imagine what the womb looks like.

Feel free to look around my studio and make yourself at home. Leave any comments or questions about my work. I look forward to reading them.