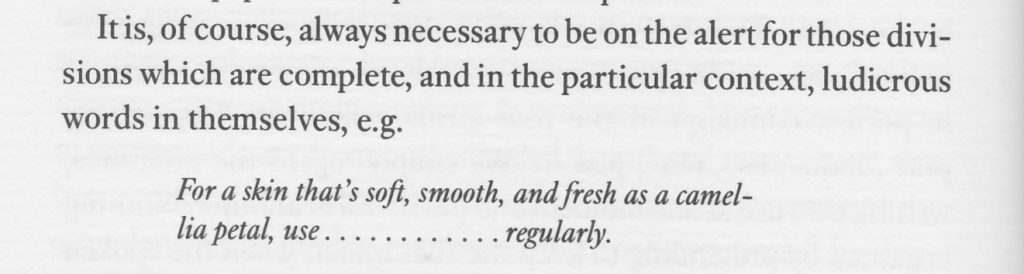

I was taught lipreading before I began using BSL (British Sign Language), and I use the two communication methods side by side. The term “lipreading” is somewhat misleading, as it’s not just lip patterns that contribute to understanding: you pick up information from the rest of the face, from body language, and from the surrounding context (something I’ll come back to). My biggest obstacle is dark sunglasses, which block the information you see around people’s eyes. If lip patterns provide information about words, the eye area often provides the equivalent of tone of voice, so lipreading someone wearing sunglasses comes across to me as a monotone.

During 2020 the situation has been reversed: masks prevent lipreading but often leave the eyes and the area around them clear. I’ve started to notice that I still pick up information this way, so I can (sometimes) recognise tone, even when there’s no way to make out words. I’ve also noticed hearing people struggling to understand others, with masks muffling voices, and I wonder whether this will change how people think about hearing loss in the longer term. Will their own difficulties lead to more understanding? Will they turn to D/deaf people as communication experts they can learn from?

I’ve been playing with images from the 1986 edition of “Lipreading – A Guide for Beginners”, masking out the lip patterns they illustrate. I’m planning a duotone risograph print, perhaps combining several of these images. Because of the local lockdown I can only prepare my files for now, so anything shown at this stage is an approximation.