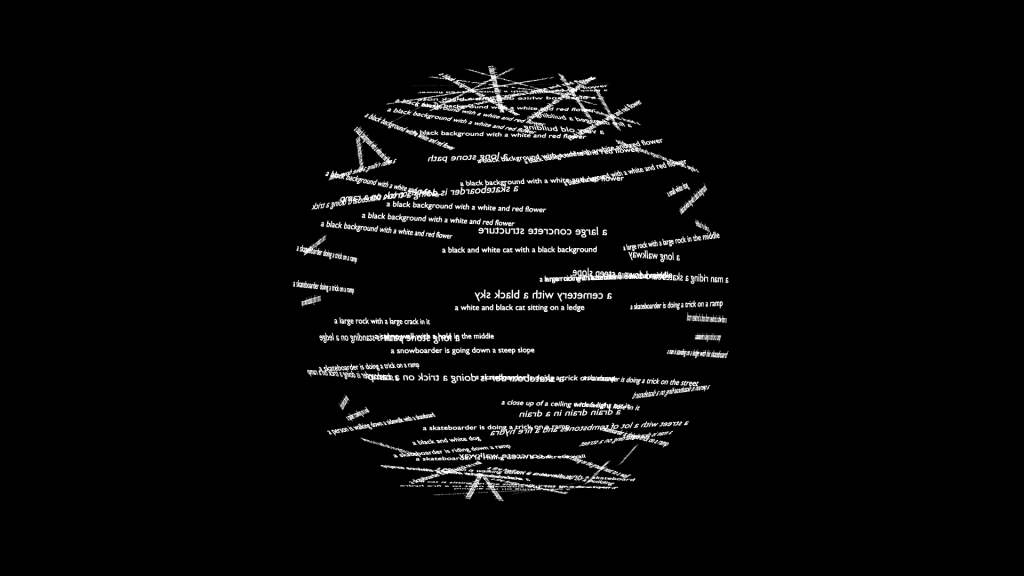

I am revisiting a creative process that has captivated me for some time: enabling an agent to perceive and learn about its environment through the lens of a computer vision model. In a previous exploration, I experimented with CLIP (Contrastive Language-Image Pre-Training), which led to the whimsical creation of a sphere composed of text, a visual representation of the model’s understanding. This time, my focus shifts to the YOLO (You Only Look Once) model. My earlier experiments with YOLO, using its default COCO-trained weights, often yielded amusingly incorrect detections: a lamp mistaken for a toilet, or a cup identified as a person’s head. Instead of striving for perfect accuracy, I intend to embrace these algorithmic errors. This project will be a playful exploration of incorrectness and of the fascinating illusions an AI model generates, turning its faults into a source of creative inspiration.

* Visualization using CLIP and Blender for the artwork “Golem Wander in Crossroads”

Ultralytics YOLO

Ultralytics YOLO is a family of real-time object detection models renowned for their speed and efficiency. Unlike two-stage detectors such as the R-CNN family, which first propose candidate regions and then classify each one, YOLO processes the entire image in a single forward pass to identify and locate objects, making it well suited to latency-sensitive applications like autonomous driving and video surveillance. In the original formulation, the architecture divides an image into a grid, and each grid cell is responsible for predicting bounding boxes and class probabilities for objects whose centers fall within it. Over the years, YOLO has evolved through numerous versions, each improving on the speed and accuracy of its predecessors.
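The grid rule described above can be sketched in a few lines of Python. This is a simplified illustration of the assignment step in the original YOLO (v1) formulation, assuming a 448x448 input and a 7x7 grid as in that paper; `Box` and `assign_to_grid` are hypothetical names, and this is not Ultralytics code. Newer versions predict on several grids of different resolutions and use more elaborate assignment schemes.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Hypothetical helper: an axis-aligned box in pixel coordinates."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def center(self) -> tuple[float, float]:
        return (self.x_min + self.x_max) / 2, (self.y_min + self.y_max) / 2


def assign_to_grid(box: Box, img_w: int, img_h: int, s: int = 7) -> tuple[int, int, float, float]:
    """Find the S x S grid cell responsible for `box` (YOLOv1-style rule).

    Returns (row, col, dx, dy), where (dx, dy) is the box center's offset
    inside that cell, measured in cell units (0 to 1).
    """
    cx, cy = box.center()
    # Map the center from pixels to grid units (0..s).
    gx = cx * s / img_w
    gy = cy * s / img_h
    # The cell containing the center is responsible; clamp so a center lying
    # exactly on the right or bottom edge still maps to the last cell.
    col = min(int(gx), s - 1)
    row = min(int(gy), s - 1)
    return row, col, gx - col, gy - row


if __name__ == "__main__":
    # A 448x448 input with a 7x7 grid, as in the original YOLO paper.
    print(assign_to_grid(Box(100, 200, 180, 260), 448, 448))  # (3, 2, 0.1875, 0.59375)
```

For the sample box, the center (140, 230) lands in row 3, column 2, so only that cell would be asked to predict this object; every other cell treats the region as background for it.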

(Text from Gemini-2.5-Pro, edited by the artist)

CLIP

https://github.com/openai/CLIP

CLIP (Contrastive Language-Image Pre-Training), developed by OpenAI, is a neural network that learns visual concepts from natural language descriptions. It consists of two main components, an image encoder and a text encoder, which are trained jointly on a dataset of 400 million image-text pairs collected from the internet. Training pulls each image toward its paired caption and away from the other captions in the batch, producing a shared embedding space where matching images and text descriptions lie close to one another. A key capability of CLIP is “zero-shot” classification: it can classify images into categories it was never explicitly trained on, simply by embedding a text description of each candidate category (for example, “a photo of a dog”) and choosing the one closest to the image embedding.
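The zero-shot step can be sketched as below, assuming the image and text embeddings have already been computed (in the openai/CLIP repository linked above they come from `model.encode_image` and `model.encode_text`). The vectors here are made-up three-dimensional toys, `zero_shot` is a hypothetical helper, and `logit_scale=100.0` is an assumed temperature; CLIP learns this value during training.

```python
import math


def normalize(v: list[float]) -> list[float]:
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def zero_shot(image_emb: list[float], text_embs: dict[str, list[float]],
              logit_scale: float = 100.0) -> dict[str, float]:
    """Return {prompt: probability} from a softmax over scaled cosine similarities."""
    img = normalize(image_emb)
    prompts = list(text_embs)
    # Cosine similarity (dot product of unit vectors), multiplied by the temperature.
    logits = [logit_scale * sum(a * b for a, b in zip(img, normalize(text_embs[p])))
              for p in prompts]
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]  # subtract the max for numerical stability
    total = sum(exps)
    return {p: e / total for p, e in zip(prompts, exps)}


if __name__ == "__main__":
    # Toy 3-D "embeddings" standing in for real encoder outputs (assumed values).
    image = [0.9, 0.1, 0.0]
    prompts = {
        "a photo of a lamp": [1.0, 0.0, 0.0],
        "a photo of a toilet": [0.0, 1.0, 0.0],
        "a photo of a cup": [0.0, 0.0, 1.0],
    }
    probs = zero_shot(image, prompts)
    print(max(probs, key=probs.get))  # a photo of a lamp
```

Because the softmax always distributes probability over the supplied prompts, the closest description wins even when none of them truly fits the image.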

(Text from Gemini-2.5-Pro, edited by the artist)

COCO

https://docs.ultralytics.com/datasets/detect/coco

COCO (Common Objects in Context) is a large-scale object detection, segmentation, and captioning dataset. It is designed to encourage research on a wide variety of object categories and is commonly used for benchmarking computer vision models. It is an essential dataset for researchers and developers working on object detection, segmentation, and pose estimation tasks.

(Text from Ultralytics YOLO Docs)