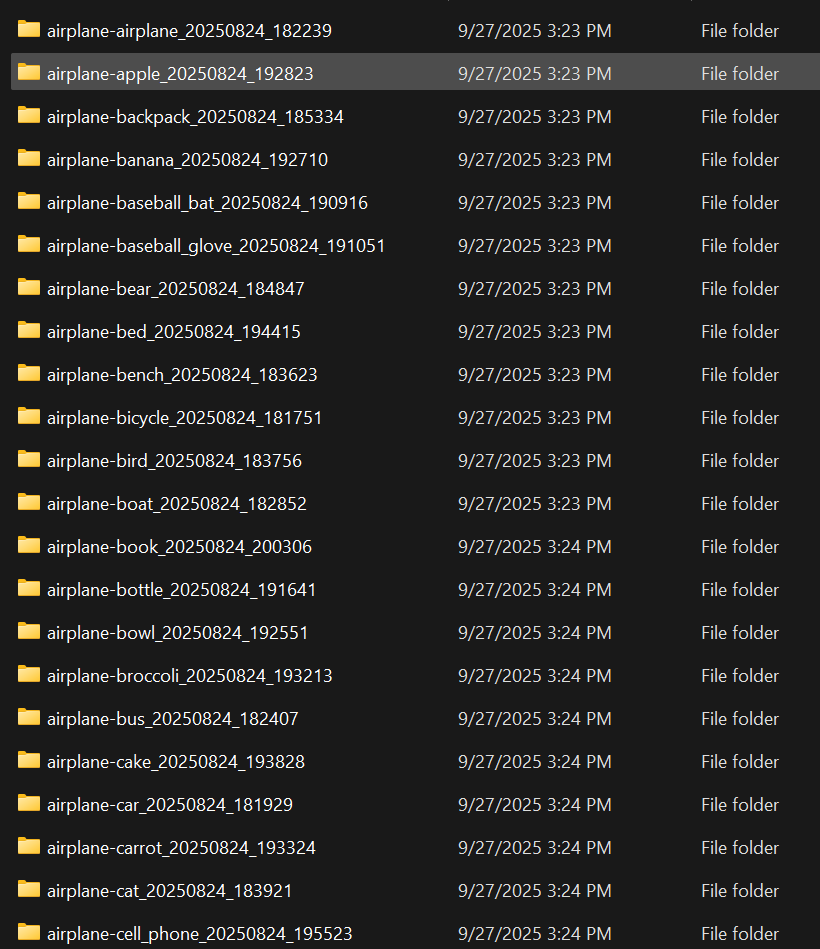

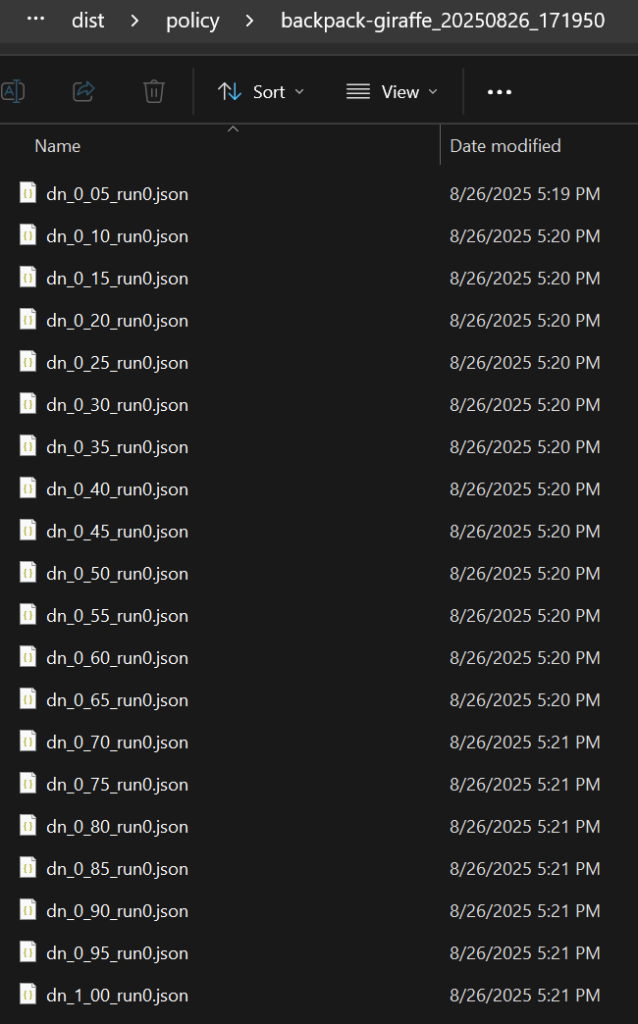

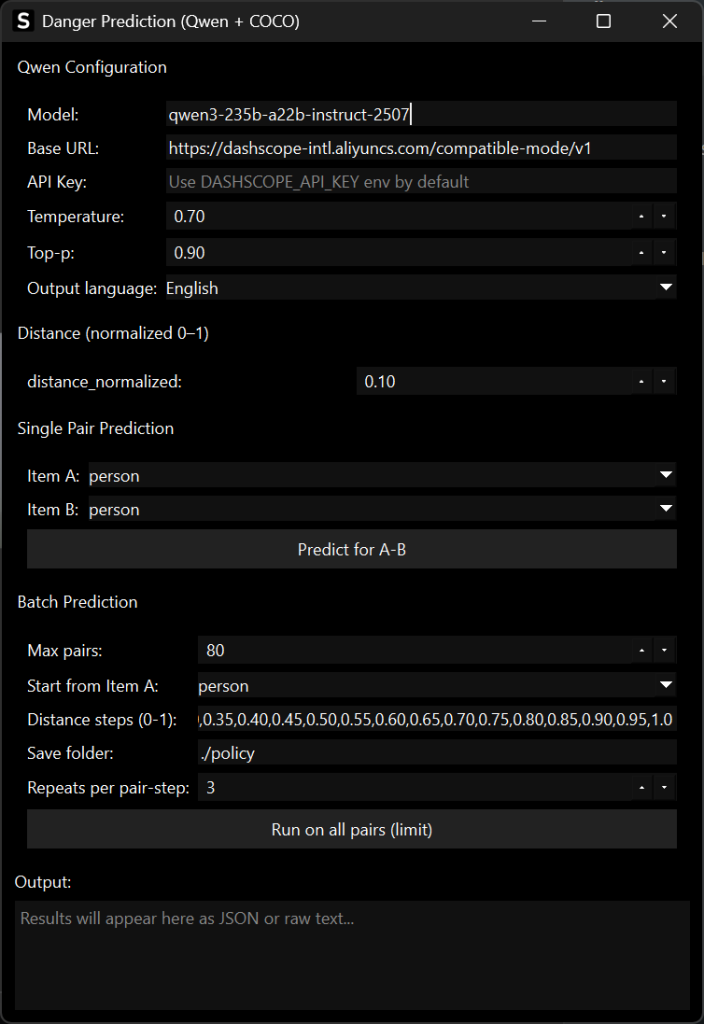

In a more experimental turn, I developed a separate piece of software to explore emergent behaviour built on the object detection output. This program uses a Large Language Model (LLM) to generate “policies” whenever objects belonging to COCO dataset classes are detected in close proximity on screen. The system calculates the normalised distance between the bounding boxes of each pair of detected objects. This distance value, together with the labels of the two objects, is then passed to the LLM, which has been prompted to generate a policy or rule based on the perceived danger or interaction potential of the objects being close together. For instance, if a “person” and a “car” are detected very close to each other, the LLM might generate a high-alert policy, whereas a “cup” and a “dining table” would result in a benign, functional policy. The result is a dynamic system in which the AI does not merely identify objects but also constructs a narrative, or a set of rules, about their relationships in the environment.
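The program's own code is not shown here, so what follows is only a minimal Python sketch of the pairwise step. It makes three assumptions. Detections arrive as pixel boxes `(x1, y1, x2, y2)` with COCO labels. "Normalised distance" is read as the centre-to-centre distance divided by the image diagonal. An illustrative proximity threshold of 0.2 is used. `Detection`, `close_pairs`, `build_policy_prompt` and the prompt wording are all hypothetical. The actual LLM call is deliberately left out rather than tied to any particular API.

```python
import itertools
import math
from dataclasses import dataclass


@dataclass
class Detection:
    """One detector output: a COCO class label and a pixel box (x1, y1, x2, y2)."""
    label: str
    box: tuple[float, float, float, float]


def normalised_distance(a: Detection, b: Detection, width: float, height: float) -> float:
    """Centre-to-centre distance between two boxes, divided by the image diagonal.

    For boxes inside the frame this lies in [0, 1]: 0 means the centres coincide.
    (Assumed definition; an edge-to-edge gap would be another valid choice.)
    """
    ax, ay = (a.box[0] + a.box[2]) / 2, (a.box[1] + a.box[3]) / 2
    bx, by = (b.box[0] + b.box[2]) / 2, (b.box[1] + b.box[3]) / 2
    return math.hypot(ax - bx, ay - by) / math.hypot(width, height)


def close_pairs(detections, width, height, threshold=0.2):
    """Yield (label_a, label_b, distance) for every pair closer than the threshold.

    The 0.2 default is a hypothetical value chosen for illustration.
    """
    for a, b in itertools.combinations(detections, 2):
        d = normalised_distance(a, b, width, height)
        if d < threshold:
            yield a.label, b.label, d


def build_policy_prompt(label_a: str, label_b: str, distance: float) -> str:
    """Hypothetical prompt template passed to the LLM for one close pair."""
    return (
        f"A '{label_a}' and a '{label_b}' were detected at a normalised distance of "
        f"{distance:.2f} (0 = same position, 1 = opposite corners of the frame). "
        "Based on the danger or interaction potential of these objects being this "
        "close, write a one-sentence policy or rule."
    )


if __name__ == "__main__":
    # Example frame of 640x480 pixels with three detections.
    dets = [
        Detection("person", (100, 100, 200, 300)),
        Detection("car", (180, 120, 400, 300)),
        Detection("cup", (600, 50, 640, 90)),
    ]
    for a, b, d in close_pairs(dets, width=640, height=480):
        # In the real system this prompt would be sent to the LLM.
        print(build_policy_prompt(a, b, d))
```

In this example only the person/car pair falls under the threshold, so a single prompt is produced. Dividing by the diagonal keeps the value independent of camera resolution, so the same threshold and prompt wording can be reused across input sizes.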